Large Model Support in Arkio

Haraldur Darri Thorvaldsson May 6, 2024

Arkio is designed to run on standalone headsets like the Meta Quest, with no fiddling with a desktop computer and no dangling wires getting in the way of a great user experience. The Quest, however, is essentially a mobile phone strapped to your face and running off battery power. Its Graphics Processing Unit (GPU) has between a tenth and a twentieth of the rendering power and memory of a recent desktop computer and can only render around half a million triangles (polygons) before it becomes overwhelmed. Meanwhile, the architectural 3D models our users wish to explore in VR routinely grow to millions or tens of millions of triangles! So how do we bridge this gap and enable Arkio to render 3D models an order of magnitude larger than the device can handle?

Luckily, we can use two properties of light and vision to our advantage: objects appear smaller and less detailed as they recede into the distance, and objects can hide other objects from view. Hence, we can substitute more distant objects with ever more simplified versions (called Levels of Detail, or LODs) and the difference will barely be noticeable. Objects that are fully obscured by other objects we remove completely. This way, we render only a small portion of a model's triangles while giving the appearance of rendering all of them. The end result is that you, our dear user, can take virtual walkthroughs of models otherwise much too heavy for mobile devices.
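To make "further away means less detail is needed" concrete, here is a minimal sketch of one common way to pick an LOD: project each LOD's geometric error onto the screen and choose the coarsest version whose error stays below about a pixel. The post doesn't say which criterion Arkio uses, so this is an illustration only; `Lod`, `choose_lod`, `max_error_px`, and the field-of-view and resolution defaults are hypothetical, illustrative values rather than Arkio or Quest specifications.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Lod:
    triangles: int          # triangle count of this level of detail
    geometric_error: float  # max deviation from the full-detail mesh, in metres


def projected_error_px(geometric_error: float, distance: float,
                       vertical_fov_deg: float, screen_height_px: int) -> float:
    """Approximate on-screen size, in pixels, of a world-space error at `distance`."""
    if distance <= 0.0:
        return math.inf  # viewer is at (or inside) the object: always needs full detail
    half_fov = math.radians(vertical_fov_deg) / 2.0
    # For a perspective camera, the visible height at `distance` is 2 * d * tan(fov / 2).
    pixels_per_metre = screen_height_px / (2.0 * distance * math.tan(half_fov))
    return geometric_error * pixels_per_metre


def choose_lod(lods: List[Lod], distance: float, vertical_fov_deg: float = 90.0,
               screen_height_px: int = 1800, max_error_px: float = 1.0) -> int:
    """Return the index of the coarsest LOD whose projected error is at most max_error_px.

    `lods` is ordered from most detailed (index 0) to least detailed.
    """
    for i in range(len(lods) - 1, -1, -1):
        err = projected_error_px(lods[i].geometric_error, distance,
                                 vertical_fov_deg, screen_height_px)
        if err <= max_error_px:
            return i
    return 0  # nothing meets the tolerance: fall back to full detail


lods = [Lod(100_000, 0.0), Lod(10_000, 0.01), Lod(1_000, 0.1)]
for d in (1.0, 10.0, 100.0):
    print(d, choose_lod(lods, d))
```

With these numbers, full detail is chosen at 1 m, the 10,000-triangle version at 10 m, and the 1,000-triangle version at 100 m: each tenfold increase in distance lets a tenfold larger geometric error shrink to the same on-screen size.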

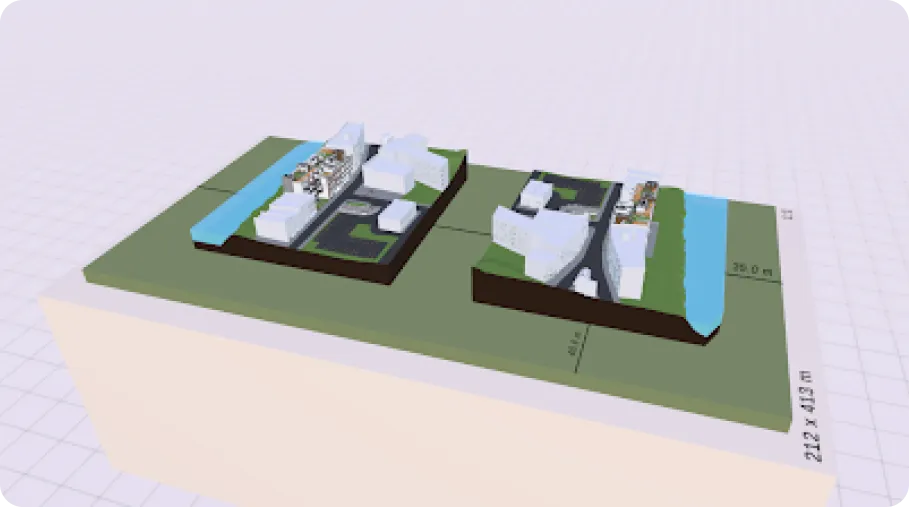

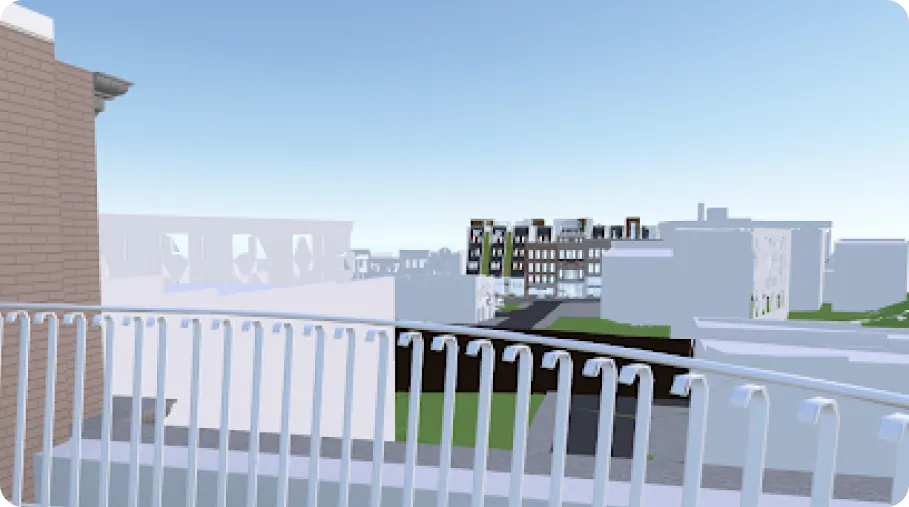

It's not enough, however, to pick a single LOD for an entire object like the building pictured above, since some parts of the building are close to your viewpoint while others are far away. For this reason, Arkio chops models up into smaller parts during model import so it can choose the LOD for each part individually at run time. The model above is split into almost 700 parts. This means you see nicely detailed trees and chairs from the vantage point shown above, while parts further away and inside the model are replaced with (drastically!) simplified versions.
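The post doesn't describe how Arkio decides where to cut a model into parts. As a minimal sketch of the idea, assuming a uniform grid (real systems often use adaptive schemes such as octrees or k-d trees so that parts end up with similar triangle counts), each triangle can be assigned to the grid cell containing its centroid. `split_into_parts` and `cell_size` are hypothetical names.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]
CellKey = Tuple[int, int, int]


def split_into_parts(triangles: List[Triangle], cell_size: float) -> Dict[CellKey, List[Triangle]]:
    """Bucket triangles into axis-aligned grid cells by their centroid.

    Each non-empty cell becomes one part that can be simplified into its own
    LOD chain at import time and LOD-selected independently at run time.
    """
    parts: Dict[CellKey, List[Triangle]] = defaultdict(list)
    for tri in triangles:
        # The centroid decides which cell (part) owns the triangle, so a
        # triangle straddling a cell boundary is never duplicated.
        cx, cy, cz = (sum(v[axis] for v in tri) / 3.0 for axis in range(3))
        key = (math.floor(cx / cell_size), math.floor(cy / cell_size), math.floor(cz / cell_size))
        parts[key].append(tri)
    return dict(parts)
```

Because ownership is decided by centroid, neighbouring parts' bounding boxes overlap slightly; that is usually acceptable, since each part only needs a bounding volume for distance and visibility tests.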

You generally don't notice these simplified versions because, as you navigate towards an area, Arkio sneakily swaps in more detailed parts for that area. At the same time, it swaps in less detailed parts for the area you are moving away from and, especially, for areas that are now obscured from view.

So as you romp around the building, Arkio continuously chooses LODs for the 700 parts and assembles them into a new mesh, custom-designed for your current viewpoint! It does this so rapidly that you barely notice the sleight of hand: building the next mesh takes only a few milliseconds. This includes estimating the visibility of the 700 parts, meaning Arkio figures out how the parts obscure one another as seen from your current viewpoint, which is no mean feat! Arkio uses this visibility information to cull away the outer parts of the building and concentrate geometric detail around you when you stand inside it, and vice versa when you stand outside it. In effect, Arkio continuously chooses the best subset of triangles to show you at each moment, up to the limit of the device it runs on.
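"Choosing the best subset of triangles up to the device's limit" can be framed as a budgeted selection problem. Arkio's actual algorithm and visibility estimator aren't described in the post, so the following is a minimal sketch of one common approach, under stated assumptions: each part comes with a precomputed visibility estimate (hypothetical `visibility` field, 0.0 meaning fully hidden), hidden parts are culled, every visible part starts at its coarsest LOD, and the refinement with the largest visibility- and distance-weighted error reduction per added triangle is applied greedily until the triangle budget is used up. `Part`, `assemble`, and the benefit formula are all hypothetical.

```python
import heapq
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Part:
    name: str
    lod_triangles: List[int]  # triangle count per LOD, index 0 = most detailed
    lod_errors: List[float]   # geometric error per LOD, index 0 = smallest
    distance: float           # distance from the viewer, in metres
    visibility: float         # estimated visible fraction: 0.0 = fully hidden, 1.0 = fully visible


def _refine_step(part: Part, level: int) -> Optional[Tuple[float, int]]:
    """Score and cost of refining `part` from `level` to `level - 1`, or None if already finest."""
    if level == 0:
        return None
    extra = part.lod_triangles[level - 1] - part.lod_triangles[level]
    # Error removed, weighted by how visible and how close the part is.
    gain = part.visibility * (part.lod_errors[level] - part.lod_errors[level - 1]) / max(part.distance, 1e-3)
    return gain / max(extra, 1), extra


def assemble(parts: List[Part], budget: int) -> Dict[str, Optional[int]]:
    """Choose an LOD index per part (None = culled), keeping total triangles within `budget`."""
    choice: Dict[str, Optional[int]] = {}
    heap: List[Tuple[float, int, int, int]] = []  # (-score, part index, level, extra triangles)
    used = 0
    for i, p in enumerate(parts):
        if p.visibility <= 0.0:
            choice[p.name] = None  # fully occluded: draw nothing
            continue
        level = len(p.lod_triangles) - 1
        choice[p.name] = level
        used += p.lod_triangles[level]
        step = _refine_step(p, level)
        if step is not None:
            heapq.heappush(heap, (-step[0], i, level, step[1]))
    while heap:
        _, i, level, extra = heapq.heappop(heap)
        if used + extra > budget:
            continue  # doesn't fit; cheaper refinements elsewhere still might
        p = parts[i]
        choice[p.name] = level - 1
        used += extra
        step = _refine_step(p, level - 1)
        if step is not None:
            heapq.heappush(heap, (-step[0], i, level - 1, step[1]))
    return choice
```

The sketch assumes the coarsest LODs of all visible parts fit in the budget. With identical parts at 2 m and 50 m plus one fully hidden part, a tight budget spends the extra triangles on the near part first, while the hidden part costs nothing at all.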

The end result is that Arkio supports individual models of up to 10-20M triangles on Quest 3, a 20-40x improvement over what was possible a year ago. You can even place and move multiple copies of large models in a scene without running out of memory, since Arkio shares meshes between model instances. Arkio also loads models incrementally as needed, so they appear almost instantly. We've experimented with arranging a dozen diverse models, totalling almost 40M triangles, in a single scene on a Quest 3.
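Sharing meshes between copies of a model means each copy stores only its own placement, while the part meshes themselves are loaded once and reference-counted. The post doesn't describe Arkio's internals, so this is a minimal sketch under that assumption; `MeshCache`, `Instance`, and the `loader` callback (standing in for reading one compressed part-LOD from local storage) are hypothetical. Loading on first use also illustrates incremental loading: only the part-LODs currently needed are ever read.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple

MeshKey = Tuple[str, int, int]  # (model id, part index, LOD index)


@dataclass
class MeshCache:
    """Keeps one copy of each (model, part, LOD) mesh, shared by every instance that uses it."""
    loader: Callable[[MeshKey], bytes]  # hypothetical: reads one compressed part-LOD from storage
    meshes: Dict[MeshKey, bytes] = field(default_factory=dict)
    refcounts: Dict[MeshKey, int] = field(default_factory=dict)

    def acquire(self, key: MeshKey) -> bytes:
        if key not in self.meshes:
            # Loaded lazily the first time any instance needs it.
            self.meshes[key] = self.loader(key)
            self.refcounts[key] = 0
        self.refcounts[key] += 1
        return self.meshes[key]

    def release(self, key: MeshKey) -> None:
        self.refcounts[key] -= 1
        if self.refcounts[key] == 0:
            # No instance draws this part-LOD any more: free its memory.
            del self.meshes[key]
            del self.refcounts[key]

    def resident_bytes(self) -> int:
        return sum(len(mesh) for mesh in self.meshes.values())


@dataclass
class Instance:
    """One placed copy of a model: only the placement is per-copy, the meshes are shared."""
    model_id: str
    position: Tuple[float, float, float]
```

Two copies of the same model that request the same part-LOD trigger a single load, and memory use stays that of one copy until the last user releases it.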

Here's a view of one copy of the building as seen from the roof of the other:

Admittedly, some LOD artifacts are visible at this point, but keep in mind that here Arkio is reducing the meshes to around 5% of their original size of roughly 10 million triangles!

Being able to walk through and review your models in virtual reality is amazing, giving you a chance to experience a building long before breaking ground on it. It's as if you could construct your design as many times as you wanted, each time improving it and fixing mistakes at no cost. Since Arkio is multi-user, you can just as easily meet clients and coworkers inside the designed space and have the most effective discussions imaginable, right there inside it! Arkio adapts to each meeting participant's device, showing more detailed versions of the same model to users on more powerful desktop machines. Coupled with Arkio's powerful Mixed Reality features, large model support is also invaluable during the construction phase, since detailed MEP (mechanical, electrical, and plumbing) models can be overlaid on the real world and inspected directly for clashes and other issues. Whether in a meeting or not, you can always sketch Arkio shapes on top of large models, proceeding straight from idea to hands-on exploration.

Since we know you literally can't wait to jump into your models, we're happy to report that Arkio imports models and pre-processes their LODs quickly, using our proprietary high-throughput triangle mesh decimation system. Arkio can chew through up to half a million triangles per second on a modern desktop PC, converting your model files to Arkio's internal compressed LOD representation. Arkio can also import models directly on Quest and other mobile devices without a PC, although processing speed and the maximum supported model size will be somewhat lower. Your models reside completely on-device: they are not processed on any external servers, and no network streaming or connectivity is needed while viewing them in Arkio. Your model files can originate from any design tool that exports or converts to .glTF/.GLB or .OBJ files. Uploading models to Arkio on one or more mobile devices is most conveniently done via the Arkio Cloud.
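Arkio's decimation system is proprietary and isn't described here. To give a feel for what decimation does, the sketch below uses vertex clustering, a simple, well-known technique that is a stand-in and not Arkio's method: every vertex is snapped to a cell of a 3D grid, each occupied cell is replaced by the average of its vertices, and triangles that collapse or duplicate are dropped. It runs in a single linear pass, which makes it fast, but it generally preserves shape less faithfully than error-driven methods such as quadric edge collapse. `decimate_by_clustering` and `cell_size` are hypothetical names.

```python
import math
from typing import Dict, List, Set, Tuple

Vec3 = Tuple[float, float, float]
Face = Tuple[int, int, int]
Cell = Tuple[int, int, int]


def decimate_by_clustering(vertices: List[Vec3], faces: List[Face],
                           cell_size: float) -> Tuple[List[Vec3], List[Face]]:
    """Simplify a triangle mesh by merging all vertices that share a grid cell.

    Larger `cell_size` values merge more vertices and give a coarser LOD.
    """
    # 1. Assign every vertex to a cell and accumulate per-cell position sums.
    cell_of: List[Cell] = []
    sums: Dict[Cell, List[float]] = {}
    for x, y, z in vertices:
        cell = (math.floor(x / cell_size), math.floor(y / cell_size), math.floor(z / cell_size))
        cell_of.append(cell)
        acc = sums.setdefault(cell, [0.0, 0.0, 0.0, 0.0])
        acc[0] += x
        acc[1] += y
        acc[2] += z
        acc[3] += 1.0
    # 2. One representative vertex per occupied cell: the mean of its members.
    index_of: Dict[Cell, int] = {}
    new_vertices: List[Vec3] = []
    for cell, (sx, sy, sz, n) in sums.items():
        index_of[cell] = len(new_vertices)
        new_vertices.append((sx / n, sy / n, sz / n))
    # 3. Re-index faces; drop those collapsed to a line or point, and duplicates.
    new_faces: List[Face] = []
    seen: Set[Tuple[int, ...]] = set()
    for a, b, c in faces:
        ia, ib, ic = index_of[cell_of[a]], index_of[cell_of[b]], index_of[cell_of[c]]
        if ia == ib or ib == ic or ia == ic:
            continue
        key = tuple(sorted((ia, ib, ic)))
        if key in seen:
            continue
        seen.add(key)
        new_faces.append((ia, ib, ic))  # keeps the original winding order
    return new_vertices, new_faces
```

Running it repeatedly with growing `cell_size` values yields a chain of progressively coarser LODs for a single part.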

We hope you'll find Arkio's support for large models useful. Implementing it and tuning it to run well on Meta Quest and other mobile platforms has been quite an interesting technical challenge. It already handles most everyday architectural models, and we'll keep making it even better and faster.